Deepfake and robocall threats loom large: What businesses need to know about deepfake audio and automated calls

In a chilling demonstration of the harm AI-supported deepfakes can cause, an employee in Hong Kong fell victim to a deepfake attack during a video conference and transferred 24 million euros to a criminal gang. The incident shows how AI-generated deepfakes threaten democracy, the rule of law, and the economy, both through social and political manipulation and through direct financial losses.

The victim most likely took part in a fake conference in which the Chief Revenue Officer (CRO) and the other participants were not real. Their appearances and voices had been replicated with AI, which made the interaction seem authentic. The incident highlights the need for greater vigilance and skepticism toward both public and seemingly private communication.

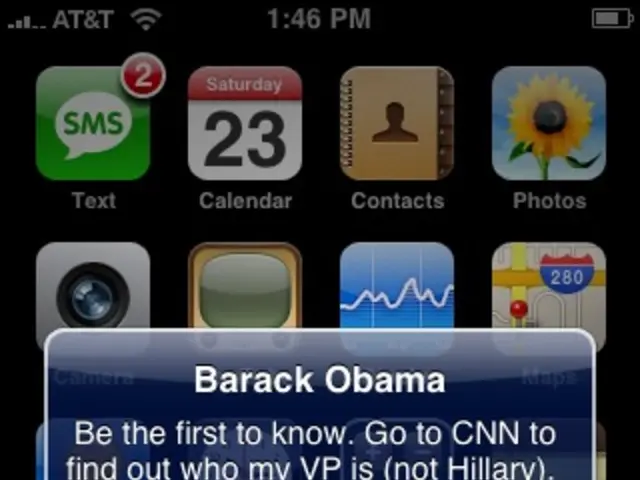

The European Union is regulating deepfakes through the AI Act: anyone who creates or distributes a deepfake in the EU will have to disclose its artificial origin and the technique used. Meanwhile, the US Federal Communications Commission (FCC) has banned the use of AI-generated voices in robocalls. The move is particularly significant in light of a scandal in which fake audio was used in a robocall campaign purporting to come from then-President Joe Biden.

To protect online accounts, use strong passwords and two-factor authentication. Never reuse the same password across multiple accounts, and never share personal or financial information over the phone, in video conferences, or through unverified links.

Cybercriminals are using advanced AI to create deepfakes that spread disinformation or steal money through robocall scams. Be cautious with calls and messages from unknown sources, especially if the caller insists the matter is urgent or asks for sensitive information or for transfers of large sums of money.
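The warning signs described above (an unknown source, claimed urgency, requests for sensitive information or large transfers) can be sketched as a simple screening rule. This is a minimal, hypothetical Python illustration, not a product or a vetted deepfake detector: the keyword lists, the `LARGE_AMOUNT` threshold, and the function names `red_flags` and `needs_verification` are all assumptions made for this example.

```python
import re

# Hypothetical keyword patterns (assumption); real screening would need tuning.
URGENCY_PATTERNS = [r"\burgent\b", r"\bimmediately\b", r"\bright now\b", r"\bconfidential\b"]
SENSITIVE_PATTERNS = [r"\bpassword\b", r"\bpin\b", r"\baccount number\b", r"\bverification code\b"]
TRANSFER_PATTERNS = [r"\btransfer\b", r"\bwire\b", r"\bpayment\b"]

# Hypothetical threshold (assumption) at which a requested amount counts as a "large sum".
LARGE_AMOUNT = 10_000


def red_flags(message: str, amount: float = 0.0) -> list[str]:
    """Return the warning signs found in a message or call transcript."""
    text = message.lower()
    flags = []
    if any(re.search(p, text) for p in URGENCY_PATTERNS):
        flags.append("urgency")
    if any(re.search(p, text) for p in SENSITIVE_PATTERNS):
        flags.append("requests sensitive information")
    if any(re.search(p, text) for p in TRANSFER_PATTERNS):
        flags.append("requests a transfer")
    if amount >= LARGE_AMOUNT:
        flags.append("large sum")
    return flags


def needs_verification(message: str, amount: float = 0.0, known_sender: bool = False) -> bool:
    """Return True if the request should be verified through a separate channel first.

    Known senders are not exempt: a deepfake can imitate a familiar colleague.
    """
    return not known_sender or bool(red_flags(message, amount))


print(red_flags("This is urgent: wire the payment immediately.", 200_000))
# ['urgency', 'requests a transfer', 'large sum']
print(needs_verification("Lunch on Friday?", known_sender=True))  # False
```

The rule deliberately flags red-flag requests even from apparently known senders, because in the Hong Kong case the attackers impersonated familiar executives.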

Companies can report suspicious calls and phone scams to the police or to the Federal Network Agency (Bundesnetzagentur). Call-blocking tools and a "Do Not Call" list can also help reduce robocalls and telemarketing calls.
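The call-blocking idea can be sketched in a few lines. This is a hypothetical Python illustration, not a real telephony API: the `CallScreen` class, its methods, the `normalize` helper, and the three outcomes (`reject`, `ring`, `voicemail`) are assumptions; in practice this logic would live in a phone system or a carrier service.

```python
from dataclasses import dataclass, field


def normalize(number: str) -> str:
    """Keep a leading '+' and the digits, so '+1 (555) 010-0199' equals '+15550100199'."""
    number = number.strip()
    prefix = "+" if number.startswith("+") else ""
    return prefix + "".join(ch for ch in number if ch.isdigit())


@dataclass
class CallScreen:
    """Hypothetical call screen combining a blocklist with a list of trusted contacts."""

    blocked: set[str] = field(default_factory=set)   # reported or known robocall numbers
    contacts: set[str] = field(default_factory=set)  # numbers the company already trusts

    def block(self, number: str) -> None:
        self.blocked.add(normalize(number))

    def add_contact(self, number: str) -> None:
        self.contacts.add(normalize(number))

    def decide(self, number: str) -> str:
        """Return 'reject', 'ring', or 'voicemail' for an incoming caller ID."""
        n = normalize(number)
        if n in self.blocked:
            return "reject"
        if n in self.contacts:
            return "ring"
        # Unknown callers are screened instead of being put straight through.
        return "voicemail"


screen = CallScreen()
screen.block("+1 (555) 010-0199")
screen.add_contact("+49 30 1234567")
print(screen.decide("+15550100199"))      # reject
print(screen.decide("+49301234567"))      # ring
print(screen.decide("+44 20 7946 0000"))  # voicemail
```

Because caller ID can be spoofed, a blocklist like this only reduces unwanted calls; it does not authenticate the caller, so a familiar number is still no proof that the voice on the line is genuine.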

A consistent Threat Detection and Response strategy, such as the approach pursued by Onapsis Research Labs, helps companies protect themselves. Companies and their employees are called upon to take personal responsibility and remain vigilant in the face of AI-generated deepfakes.

The company whose CRO was impersonated in the Hong Kong case is not specified. Separately, The Hongkong and Shanghai Hotels appointed Julien Munoz as Chief Commercial Officer, effective November 12, 2025.

In conclusion, the rise of AI-generated deepfakes calls for a heightened level of awareness and caution. By taking proactive measures to secure our online accounts and by treating unexpected requests with skepticism, we can help mitigate the harm these sophisticated forgeries can cause.